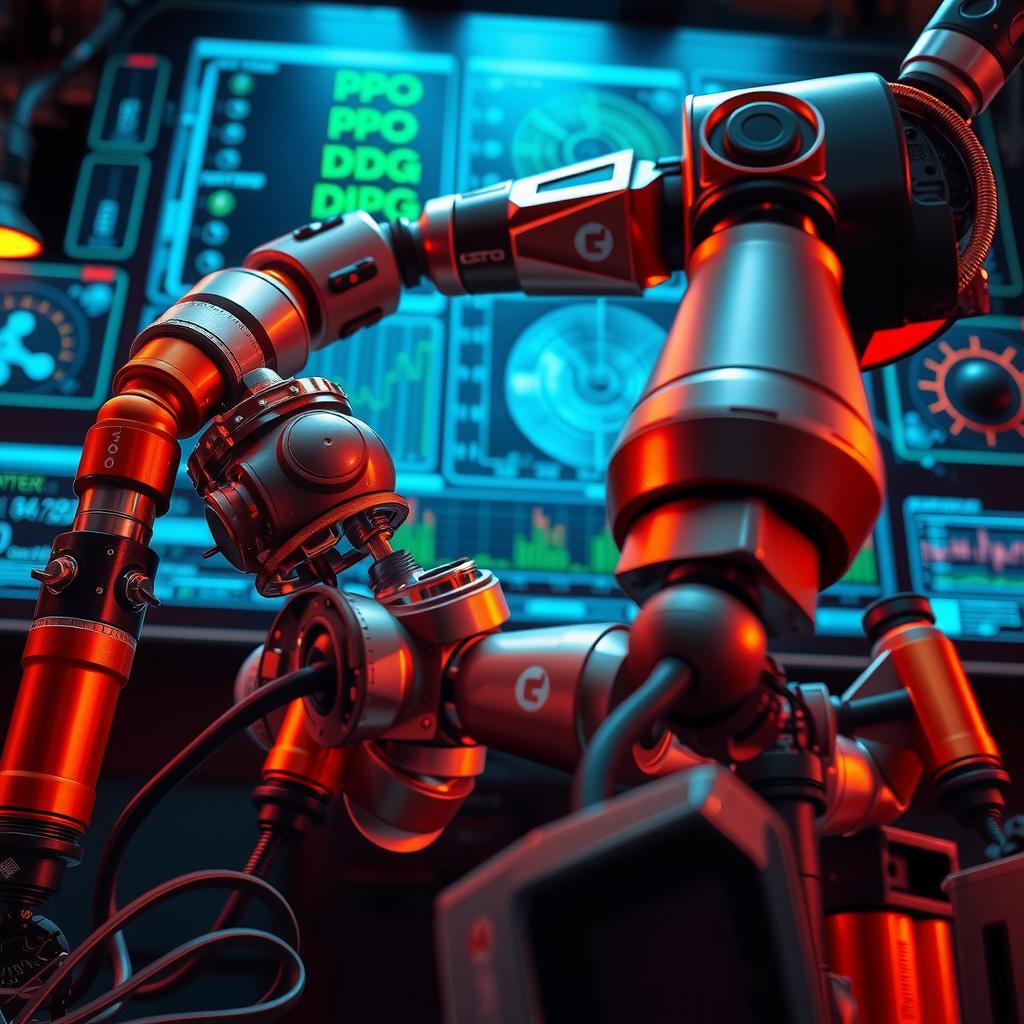

In the rapidly evolving field of robotics, achieving efficient and precise control remains one of the most significant challenges. As robots are increasingly deployed in diverse applications, from industrial automation to personal assistance, the choice of algorithms for reinforcement learning becomes crucial. Among these algorithms, Proximal Policy Optimization (PPO) and Deep Deterministic Policy Gradient (DDPG) have emerged as prominent contenders for tackling complex robotic control tasks. But how do these two approaches stack up against each other? This question forms the crux of a comparative study that aims to shed light on their respective strengths and weaknesses.

Understanding which algorithm performs better under various conditions can significantly impact not only academic research but also practical implementations in real-world scenarios. The core value of this article lies in its comprehensive analysis that evaluates PPO and DDPG based on performance metrics tailored specifically for robotic control tasks. By dissecting their operational mechanisms, adaptability to different environments, and efficiency in learning policies over time, readers will gain insights into which method might be more suitable depending on specific requirements.

Through this examination, valuable lessons can be drawn regarding how these reinforcement learning strategies interact with dynamic systems inherent in robotics. The results promise to provide clarity amidst a landscape filled with technical jargon and complex decision-making processes prevalent within algorithm comparisons today. Furthermore, by delving into case studies involving both PPO and DDPG across various control scenarios—such as balancing robots or navigating obstacles—the article sets out not just to inform but also to engage practitioners looking for optimal solutions.

As we navigate through this comparative study between PPO and DDPG, it becomes essential to understand not only their theoretical foundations but also how they perform when put into practice within intricate robotic frameworks. This exploration offers an opportunity for enthusiasts—whether they are researchers or industry professionals—to align their projects with the right algorithmic approach while fostering innovation in robotic technology overall. Join us as we unravel the complexities behind these two powerful reinforcement learning models!

Key points:

- Algorithm Methodologies: Understanding the methodologies of PPO and DDPG is crucial for practitioners in robotic control. Both are reinforcement learning algorithms, but they operate differently. PPO (Proximal Policy Optimization) uses a clipped objective function that stabilizes training, making it a good fit when reliable, predictable learning matters most; as an on-policy method, however, it typically needs more environment interactions than off-policy alternatives. DDPG (Deep Deterministic Policy Gradient), an off-policy algorithm designed for continuous action spaces, excels in scenarios requiring fine-grained control but can struggle with stability if not properly tuned. This comparative study highlights these differences and helps practitioners select the right approach for their specific control tasks.

- Performance Evaluation Criteria: The effectiveness of any reinforcement learning approach hinges on rigorous performance evaluation criteria. Comparing how well PPO and DDPG perform across various robotic environments reveals their strengths and weaknesses. PPO often converges more reliably and is easier to tune because of its stable updates, while DDPG may perform better in the high-dimensional continuous action spaces typical of advanced robotic applications. Assessing both algorithms through controlled experiments in multiple settings equips readers to choose between them based on task requirements.

- Implementation Best Practices: Implementing either algorithm effectively requires attention to how each behaves under the diverse conditions encountered in practical robotics. Practitioners must account for the stochastic-policy exploration built into PPO versus DDPG’s deterministic policy, which needs exploration noise added explicitly. Replay-buffer management for DDPG and adaptive learning rates for PPO can also significantly influence training dynamics and overall success rates in complex control tasks.

Through this comparative analysis focused on PPO, DDPG, and their application within robotic control solutions, stakeholders are empowered with actionable knowledge that informs their decision-making processes surrounding reinforcement learning strategies tailored to achieve optimal results.

Introduction: The Role of Reinforcement Learning in Robotics

Understanding the Foundations of Robotic Control

In recent years, reinforcement learning (RL) has emerged as a pivotal methodology for advancing robotic control systems. As robotics continues to evolve in complexity and capability, the necessity for robust algorithms that can learn and adapt in dynamic environments becomes increasingly critical. Among various RL techniques, two algorithms—Proximal Policy Optimization (PPO) and Deep Deterministic Policy Gradient (DDPG)—stand out due to their distinct approaches toward handling continuous action spaces. This comparative analysis aims to elucidate the performance variances between these algorithms within diverse robotic control tasks, thereby providing insights into their applicability across different scenarios.

The significance of reinforcement learning in robotics is underscored by its ability to enable robots to make decisions based on trial-and-error experiences rather than relying solely on pre-programmed behaviors. This adaptability allows robots to optimize their actions over time, making them more effective at performing complex tasks such as manipulation or navigation. However, with numerous RL strategies available today, choosing the right algorithm necessitates an informed evaluation process; thus arises the importance of comparing PPO and DDPG.

Both PPO and DDPG have unique strengths that can make them preferable under certain conditions. For instance, PPO is known for stable training and reliable convergence, but it may not always excel in high-dimensional continuous action spaces, where DDPG might perform better thanks to its off-policy learning. Such distinctions warrant thorough exploration since they directly affect how effectively a robot can be trained for specific tasks such as autonomous driving or robotic arm manipulation.

Furthermore, understanding how each algorithm performs under varying reward structures is crucial when considering deployment options in real-world applications. A comparative study focusing on metrics such as sample efficiency and final policy performance will yield valuable insights not only into which algorithm might perform better but also why it does so from a theoretical standpoint grounded in reinforcement learning principles.

As researchers continue delving into this domain, establishing clear benchmarks through rigorous testing will serve both academia and industry alike by guiding future developments within robotic technologies. By systematically evaluating PPO against DDPG, one gains clarity on the nuanced differences that could influence decision-making processes regarding optimal control strategies tailored specifically for complex robotic operations.

In conclusion, embracing a detailed examination of these prominent reinforcement learning frameworks facilitates a deeper understanding of their implications within robotics—a field poised at the intersection of innovation and practical application where intelligent decision-making ultimately defines success.

Algorithmic Framework: Understanding PPO and DDPG

An In-Depth Look at Reinforcement Learning Algorithms

In the field of reinforcement learning, two prominent algorithms are Proximal Policy Optimization (PPO) and Deep Deterministic Policy Gradient (DDPG). Each algorithm offers unique mechanics, methodologies, strengths, and weaknesses that cater to various control tasks in robotic systems. PPO operates on a policy gradient framework that emphasizes stable updates through clipped objective functions. This stability is crucial for avoiding drastic changes during training, which can lead to performance degradation. On the other hand, DDPG, designed for continuous action spaces, employs an actor-critic method combining both a policy network (actor) and a value network (critic). This dual approach allows DDPG to learn more effectively from high-dimensional inputs but can suffer from issues like overestimation bias.
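To make the clipped objective concrete, here is a minimal NumPy sketch of PPO's clipped surrogate for a single batch. The function name, the argument names, and the `clip_eps=0.2` default are illustrative assumptions rather than anything prescribed by this article, and a real implementation would compute the same quantity inside an automatic-differentiation framework so that it can be maximized by gradient ascent.

```python
import numpy as np

def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective for one batch (a quantity to maximize).

    All names and the clip_eps default are illustrative assumptions.
    """
    # Probability ratio pi_new(a|s) / pi_old(a|s), computed from log-probabilities.
    ratio = np.exp(new_log_probs - old_log_probs)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the element-wise minimum removes any incentive to push the ratio
    # outside [1 - clip_eps, 1 + clip_eps].
    return float(np.mean(np.minimum(unclipped, clipped)))

# Toy batch with made-up numbers: the ratios are 1.8, 1.0 and 0.2.
old_lp = np.log(np.array([0.5, 0.5, 0.5]))
new_lp = np.log(np.array([0.9, 0.5, 0.1]))
adv = np.array([1.0, 1.0, -1.0])
objective = ppo_clipped_objective(new_lp, old_lp, adv)
```

In the toy batch, the first sample's ratio of 1.8 is capped at 1.2 and the third sample's ratio of 0.2 is floored at 0.8, which is how clipping limits the size of a single policy update.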

Strengths and Weaknesses of PPO

The strength of PPO lies in its simplicity and effectiveness across diverse environments. It maintains stable learning while remaining relatively easy to implement, which makes it an appealing choice for practitioners. Furthermore, clipping keeps each policy update close to the previous policy, an advantage in complex tasks that demand consistent performance. However, this stability comes at a cost: compared to DDPG, PPO often requires more samples, because it is an on-policy method that learns from freshly collected rollouts rather than reusing old experience from a replay buffer. As such, while PPO excels in scenarios where robustness is paramount, it may lag behind DDPG when data is expensive to collect or rapid adaptation is necessary.

Performance Evaluation through Comparative Study

When these two algorithms are compared on specific control tasks, such as those encountered in robotics, the differences become particularly pronounced. DDPG can reach high rewards with fewer environment interactions in continuous action environments, because its experience replay buffer lets it reuse past transitions and its deterministic policy targets actions directly; however, it also faces challenges with convergence stability under certain conditions. Conversely, PPO, although initially slower in some settings because of its conservative updates, may ultimately provide better long-term generalization across varied tasks once adequately trained. These nuances highlight the importance of context when selecting between PPO and DDPG: the decision is best informed by understanding each algorithm’s inherent characteristics relative to the specific objectives of the robotic control problem.
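The experience replay mechanism mentioned above can be sketched with the standard library alone. The class name `ReplayBuffer`, its method names, and the capacity and batch size in the usage lines are hypothetical choices for illustration; production DDPG code typically stores transitions in preallocated arrays instead.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of transitions with uniform random sampling.

    Hypothetical helper for illustration; not taken from any specific library.
    """

    def __init__(self, capacity, seed=None):
        # A deque with maxlen silently drops the oldest transition when full.
        self._storage = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self._storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal correlation
        # between consecutive transitions.
        batch = self._rng.sample(list(self._storage), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self._storage)

# Usage with toy integer "states": only the 3 most recent transitions survive.
buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(state=t, action=0.1 * t, reward=1.0, next_state=t + 1, done=False)
states, actions, rewards, next_states, dones = buffer.sample(batch_size=2)
```

Because sampled transitions may have been generated by an older version of the policy, this kind of reuse is only valid for off-policy methods such as DDPG, which is the source of its sample-efficiency advantage over PPO.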

Task-Specific Considerations in Algorithm Selection

Understanding PPO and DDPG for Enhanced Decision Making

In the realm of reinforcement learning, choosing between PPO (Proximal Policy Optimization) and DDPG (Deep Deterministic Policy Gradient) requires a nuanced understanding of how these algorithms align with specific task requirements. Practitioners often encounter scenarios where empirical findings indicate a stark contrast in performance based on the nature of control tasks. For instance, when dealing with high-dimensional action spaces common in robotic control applications, DDPG has shown superior effectiveness due to its ability to handle continuous actions effectively. This advantage is particularly pronounced in environments requiring fine motor skills or intricate maneuvers, making it an ideal choice for robotic arms or autonomous vehicles.

Conversely, PPO supports both discrete and continuous action spaces, whereas DDPG is limited to continuous ones, so PPO is the natural choice where clear-cut discrete choices are prevalent. Its clipped objective function promotes stability during training, while its stochastic policy can explore diverse strategies within complex environments. This robustness makes PPO suitable for tasks involving safety-critical operations or where computational resources are constrained. Furthermore, comparative studies suggest that practitioners favor PPO when interpretability and ease of tuning become paramount concerns; its design allows easier adjustments compared to the complexity inherent in configuring DDPG.

Performance Evaluation: A Comparative Study

When evaluating the performance of both algorithms through rigorous experimental setups, one must consider metrics such as convergence speed and overall reward maximization across varying scenarios. While both algorithms have their strengths, they suit different types of problems. For example, under conditions with high variability, such as simulated environments mimicking real-world unpredictability, agents trained with PPO may outperform those trained with DDPG thanks to their stochastic exploration and lower-variance updates.
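One way to turn "convergence speed" and "reward maximization" into comparable numbers is to work from each agent's per-episode returns. The sketch below is an assumption about how such metrics could be defined, not a standard benchmark protocol; the function names, the smoothing window, and the example returns are all made up for illustration.

```python
import numpy as np

def moving_average(returns, window):
    """Smooth per-episode returns with a sliding mean over `window` episodes."""
    returns = np.asarray(returns, dtype=float)
    if window < 1 or window > returns.size:
        raise ValueError("window must be between 1 and the number of episodes")
    return np.convolve(returns, np.ones(window) / window, mode="valid")

def episodes_to_threshold(returns, threshold, window):
    """Episodes needed before the smoothed return first reaches `threshold`.

    Returns None if the threshold is never reached (a convergence-speed proxy).
    """
    smoothed = moving_average(returns, window)
    hits = np.nonzero(smoothed >= threshold)[0]
    if hits.size == 0:
        return None
    # smoothed[i] averages episodes i .. i + window - 1, so i + window episodes
    # have been consumed by the time that value is available.
    return int(hits[0]) + window

def final_performance(returns, last_k):
    """Mean return over the last `last_k` episodes (a final-policy proxy)."""
    return float(np.mean(np.asarray(returns, dtype=float)[-last_k:]))

# Made-up returns for illustration only; these are not experimental results.
example_returns = [0, 0, 10, 10, 10, 10]
speed = episodes_to_threshold(example_returns, threshold=10, window=2)
final = final_performance(example_returns, last_k=3)
```

Applying the same two functions to learning curves from both algorithms, averaged over several random seeds, gives a like-for-like comparison of how quickly each learns and how well the final policy performs.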

Moreover, recent empirical findings suggest considerations beyond mere algorithmic performance; factors like implementation simplicity also play a crucial role in guiding practitioners’ decisions between these two approaches. The operational overhead involved with hyperparameter tuning and model architecture nuances can significantly impact project timelines and outcomes—a point highlighted through various case studies focusing on implementations across industries from robotics to game development.

Practical Application Insights: Tailoring Choices

Selecting between PPO and DDPG extends beyond theoretical understanding into practical application insights derived from prior experiences shared among researchers and industry professionals alike. Several investigations underscore the importance of aligning algorithm selection not only with technical specifications but also considering team expertise related to each approach’s intricacies—especially regarding debugging methodologies unique to each framework’s structure.

For instance, teams more familiar with policy gradient methods might gravitate towards PPO, appreciating its straightforward nature even though DDPG may be better suited to some continuous-control robotics applications. Ultimately, the right choice hinges on assessing specific task demands together with organizational capacity; the key is adaptability within the fast-evolving technological landscape of reinforcement learning.

By combining knowledge from published comparisons with direct experience of use cases involving either PPO or DDPG, practitioners can make informed choices that lead to successful implementations and foster innovation across automated systems.

In the realm of robotic control, practitioners often face a critical decision: which reinforcement learning algorithm—PPO (Proximal Policy Optimization) or DDPG (Deep Deterministic Policy Gradient)—will yield superior performance for their specific tasks. This comparative study aims to dissect the methodologies and advantages inherent in both algorithms, thereby equipping readers with essential insights into optimal application scenarios.

The first notable difference between PPO and DDPG lies in their training stability and sample efficiency. While PPO is designed to be more stable due to its clipped objective function that prevents drastic policy updates, it may require more samples for convergence compared to DDPG, which excels in continuous action spaces. In practice, this means that when faced with environments demanding high-dimensional continuous actions, utilizing DDPG might lead to faster learning outcomes despite potentially less stable training phases. Therefore, understanding these operational mechanics is crucial for selecting an appropriate algorithm based on task characteristics.

Another aspect worth examining is how each algorithm handles exploration versus exploitation trade-offs during training. In general, PPO maintains a balance through its stochastic policy, making it particularly effective in environments where diverse strategies are beneficial. DDPG, on the other hand, relies on a deterministic policy and can struggle to explore if not properly tuned, which leads practitioners to add exploration noise to its actions, such as Ornstein-Uhlenbeck noise or uncorrelated Gaussian noise. The choice between these approaches ultimately hinges on whether one prioritizes exploratory behavior or refined exploitative actions within robotic control systems.
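As an illustration of the Ornstein-Uhlenbeck noise mentioned above, the following NumPy sketch discretizes the process with a simple Euler-Maruyama step and adds it to a deterministic action. The class and function names, the default `theta`, `sigma`, and `dt` values, and the action bounds are assumptions chosen for the example, and suitable values depend on the task.

```python
import numpy as np

class OrnsteinUhlenbeckNoise:
    """Temporally correlated noise that drifts back toward a mean `mu`.

    Names and default parameters are illustrative assumptions.
    """

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1e-2, seed=None):
        self.mu = np.full(size, mu, dtype=float)
        self.theta = theta      # strength of the pull back toward mu
        self.sigma = sigma      # scale of the random perturbation
        self.dt = dt            # discretization time step
        self._rng = np.random.default_rng(seed)
        self.reset()

    def reset(self):
        # Call at the start of each episode so noise does not carry over.
        self.state = self.mu.copy()

    def sample(self):
        drift = self.theta * (self.mu - self.state) * self.dt
        diffusion = self.sigma * np.sqrt(self.dt) * self._rng.standard_normal(self.state.shape)
        self.state = self.state + drift + diffusion
        return self.state.copy()

def noisy_action(deterministic_action, noise, low, high):
    """Add exploration noise to the actor's output and clip to the action bounds."""
    return np.clip(deterministic_action + noise.sample(), low, high)

# Usage: perturb a hypothetical 3-dimensional actor output bounded in [-1, 1].
ou_noise = OrnsteinUhlenbeckNoise(size=3, seed=1)
action = noisy_action(np.array([0.95, -0.95, 0.0]), ou_noise, low=-1.0, high=1.0)
```

Because successive samples are correlated, the perturbation pushes the robot in a consistent direction for several steps rather than jittering around the deterministic action, which is the usual motivation for preferring it over independent noise in physical control tasks.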

Finally, experiments across various robotic environments suggest that neither algorithm universally outperforms the other; each shows distinct strengths under differing conditions. For instance, some control tasks may benefit significantly from the adaptability of PPO, while others requiring precise continuous control may find greater success with DDPG. Thus, a thorough analysis of the specific task requirements is invaluable for optimizing results in real-world applications.

FAQ:

Q: How do I choose between PPO and DDPG for my robotic project?

A: Choosing between PPO and DDPG depends largely on your project’s specific requirements regarding action space dimensions and desired stability levels during training.
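The rule of thumb in this answer can be written down as a small helper. This is only a sketch of the heuristics discussed in this article, under the assumption that the two deciding factors are the action-space type and whether training stability or reuse of collected data matters more; the function name and its string arguments are hypothetical, and real projects should validate the choice empirically.

```python
def candidate_algorithms(action_space, prioritize="stability"):
    """Rank PPO and DDPG for a task using this article's rough heuristics.

    action_space: "discrete" or "continuous"
    prioritize:   "stability" or "sample_reuse"
    Hypothetical helper for illustration, not a definitive selection rule.
    """
    if action_space not in {"discrete", "continuous"}:
        raise ValueError("action_space must be 'discrete' or 'continuous'")
    if prioritize not in {"stability", "sample_reuse"}:
        raise ValueError("prioritize must be 'stability' or 'sample_reuse'")

    if action_space == "discrete":
        # DDPG's actor outputs a continuous action, so it does not apply here.
        return ["PPO"]
    if prioritize == "stability":
        return ["PPO", "DDPG"]
    # Off-policy replay lets DDPG reuse past transitions.
    return ["DDPG", "PPO"]

# Usage: a continuous-control arm where each environment step is expensive.
ranking = candidate_algorithms("continuous", prioritize="sample_reuse")
```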

Q: What are key considerations when implementing PPO or DDPG?

A: Key considerations include understanding each algorithm’s handling of exploration-exploitation trade-offs and recognizing their sample efficiency differences based on your particular control tasks.

Q: Can either PPO or DDPG handle all types of reinforcement learning problems?

A: No. Neither algorithm fits every problem: DDPG applies only to continuous action spaces, while PPO handles both discrete and continuous actions but typically needs more samples. Each has strengths suited to particular applications within robotics.